Scraping Google SERP with Geolocation

Google Search Engine Result Pages (SERP) 🔍 are an important source of information for many businesses. SERP includes organic search results, ads, products, places, knowledge graphs, etc. For example, by scraping Google SERP you can learn which brands have paid ads for specific keywords or analyze how your website is positioned.

One of the most important factors affecting what a user sees on a keyword's SERP is geolocation 🗺. We'll show you how to scrape Google SERP for a keyword from any location, regardless of the origin of your IP address. This can be done simply by setting search URL query parameters. It's also possible to parametrize and automate scraping 🤖 using the Python script we share below.

Google Search URL

Let's start with a basic Google search query for a keyword.

The URL for Google search is https://www.google.com/search and the keyword is provided in the q query parameter.

The keyword must be URL encoded.

An example URL for the keyword kitchen sink is https://www.google.com/search?q=kitchen+sink.
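Here is a minimal sketch of that encoding step in Python. The helper name build_search_url is ours and only illustrative; quote_plus comes from the standard library and turns spaces into + signs, as in the URL above.

```python
from urllib.parse import quote_plus

GOOGLE_SEARCH_URL = "https://www.google.com/search"


def build_search_url(keyword: str) -> str:
    """Return a Google search URL with the keyword URL-encoded in `q`."""
    return f"{GOOGLE_SEARCH_URL}?q={quote_plus(keyword)}"


print(build_search_url("kitchen sink"))
```

Characters with a special meaning in URLs, such as &, are percent-encoded by the same call, so the keyword cannot accidentally break the query string.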

Geolocation

To add geolocation to the search URL, you just have to add two query parameters:

- uule for a specific location

- gl for the country

The URL for kitchen sink results from the United States would be: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICINVW5pdGVkIFN0YXRlcw&gl=us.

A few examples for other countries and locations:

- India: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICIFSW5kaWE&gl=in

- Brazil: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICIGQnJhemls&gl=br

- Germany: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICIHR2VybWFueQ&gl=de

- California, United States: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICIYQ2FsaWZvcm5pYSxVbml0ZWQgU3RhdGVz&gl=us

- New York City, United States: https://www.google.com/search?q=kitchen+sink&uule=w+CAIQICIfTmV3IFlvcmssTmV3IFlvcmssVW5pdGVkIFN0YXRlcw&gl=us

You can confirm that the results are from the desired location in the footer.

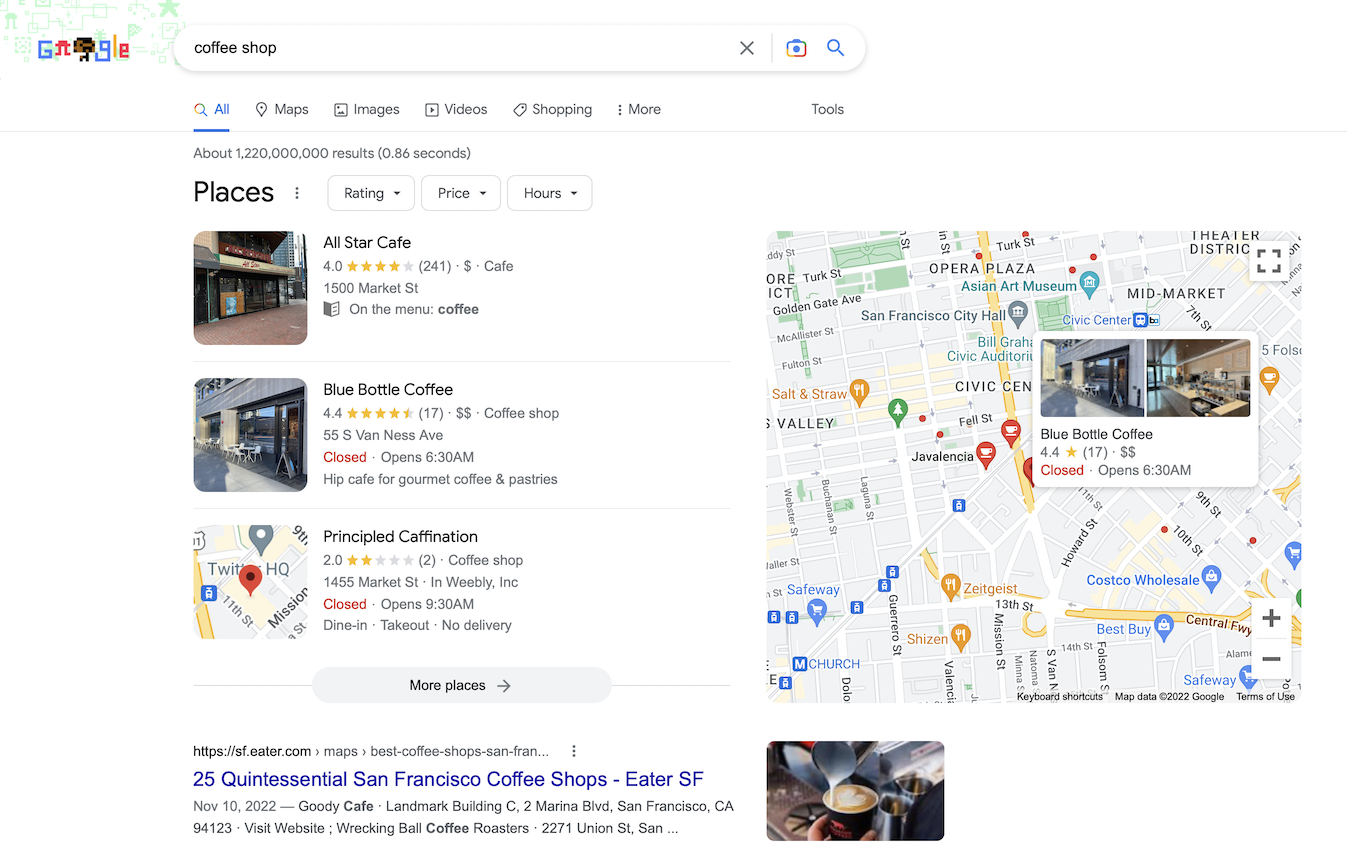

Using this method, you can even find local businesses that appear in Google SERP for a specific location. For example, coffee shops in San Francisco, California, United States: https://www.google.com/search?q=coffee+shop&uule=w+CAIQICImU2FuIEZyYW5jaXNjbyxDYWxpZm9ybmlhLFVuaXRlZCBTdGF0ZXM&gl=us.

For the URL above you will see results as if you were in San Francisco, CA regardless of your actual location (country origin of your IP address).

Generate values for geolocation query params

Now, how do you know what values to set for the uule and gl query parameters?

The gl param is fairly straightforward as it corresponds to a country code.

Here is the full list of all available countries: https://developers.google.com/custom-search/docs/xml_results_appendices#countryCodes.

The uule param is trickier.

To manually generate uule for a desired location, you can use this online tool: https://site-analyzer.pro/services-seo/uule/.

A list of all available locations is provided in this CSV file in the Canonical Name column: https://developers.google.com/static/adwords/api/docs/appendix/geo/geotargets-2022-09-14.csv.
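If you keep a local copy of that CSV file, picking location strings out of it might look like the sketch below. Only the Canonical Name column is taken from the description above. The function name find_canonical_names, the Criteria ID column, and the sample rows are made up for illustration.

```python
import csv
import io
from typing import Iterable, List

# Made-up sample rows; the real file has more columns and many more rows.
SAMPLE_CSV = (
    "Criteria ID,Canonical Name\n"
    '1,"San Francisco,California,United States"\n'
    '2,"California,United States"\n'
    '3,"Lezigne,Pays de la Loire,France"\n'
)


def find_canonical_names(csv_lines: Iterable[str], needle: str) -> List[str]:
    """Return every Canonical Name that contains `needle` (case-insensitive)."""
    needle = needle.lower()
    reader = csv.DictReader(csv_lines)
    return [
        row["Canonical Name"]
        for row in reader
        if needle in row["Canonical Name"].lower()
    ]


print(find_canonical_names(io.StringIO(SAMPLE_CSV), "california"))
```

For the downloaded file you would pass an open file object instead of the in-memory sample, for example open(path, newline="", encoding="utf-8").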

There's a fairly simple algorithm behind generating the uule parameter. It involves a fixed prefix, a special key which depends on the length of the location string, and base64 encoding. If you're interested, here is a blog post from Moz (an SEO tool) which describes it in detail: https://moz.com/blog/geolocation-the-ultimate-tip-to-emulate-local-search.
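To make the three ingredients concrete, here is a rough sketch that reproduces the uule values from the example URLs in this post. It is our reading of the scheme, not an official implementation: the function name make_uule is illustrative, and we assume plain ASCII location names shorter than 62 characters, where the key is simply the character at position "length" in the alphabet A-Z, a-z, 0-9.

```python
import base64
import string

# Fixed prefix seen in every uule value in this post.
UULE_PREFIX = "w+CAIQICI"
# Key alphabet: the key is the character whose index equals the name length.
# Assumption: this sketch only covers lengths 0..61.
LENGTH_KEYS = string.ascii_uppercase + string.ascii_lowercase + string.digits


def make_uule(canonical_name: str) -> str:
    """Build a uule value from a canonical location name."""
    raw = canonical_name.encode("utf-8")
    if len(raw) >= len(LENGTH_KEYS):
        raise ValueError("location name too long for this sketch")
    key = LENGTH_KEYS[len(raw)]
    # base64-encode the name and drop the trailing '=' padding
    encoded = base64.b64encode(raw).decode("ascii").rstrip("=")
    return f"{UULE_PREFIX}{key}{encoded}"


print(make_uule("United States"))
```

"United States" has 13 characters, so the key is N, which is why the United States URL above contains w+CAIQICIN followed by the base64 text.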

If you need to automate uule parameter generation, there's a Python package for this: https://github.com/ogun/uule_grabber.

It generates the uule value for you given the location string.

Example usage:

import uule_grabber

print(uule_grabber.uule("Lezigne,Pays de la Loire,France"))

We will use it later in a script to automate Google SERP web scraping with geolocation.
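When debugging, it can help to go the other way and check which location a uule value stands for. The sketch below assumes the layout described earlier (fixed prefix, one key character, then base64 text without padding); decode_uule is a hypothetical helper of ours, not part of uule_grabber.

```python
import base64

UULE_PREFIX = "w+CAIQICI"


def decode_uule(uule: str) -> str:
    """Recover the location string from a uule value."""
    if not uule.startswith(UULE_PREFIX):
        raise ValueError("unexpected uule prefix")
    # skip the fixed prefix and the single length-key character
    payload = uule[len(UULE_PREFIX) + 1:]
    # restore the '=' padding that base64 decoding expects
    padded = payload + "=" * (-len(payload) % 4)
    return base64.b64decode(padded).decode("utf-8")


print(decode_uule("w+CAIQICIHR2VybWFueQ"))
```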

Result page language

The result page language (host language) is another parameter which might be relevant.

You set the language in the hl query param.

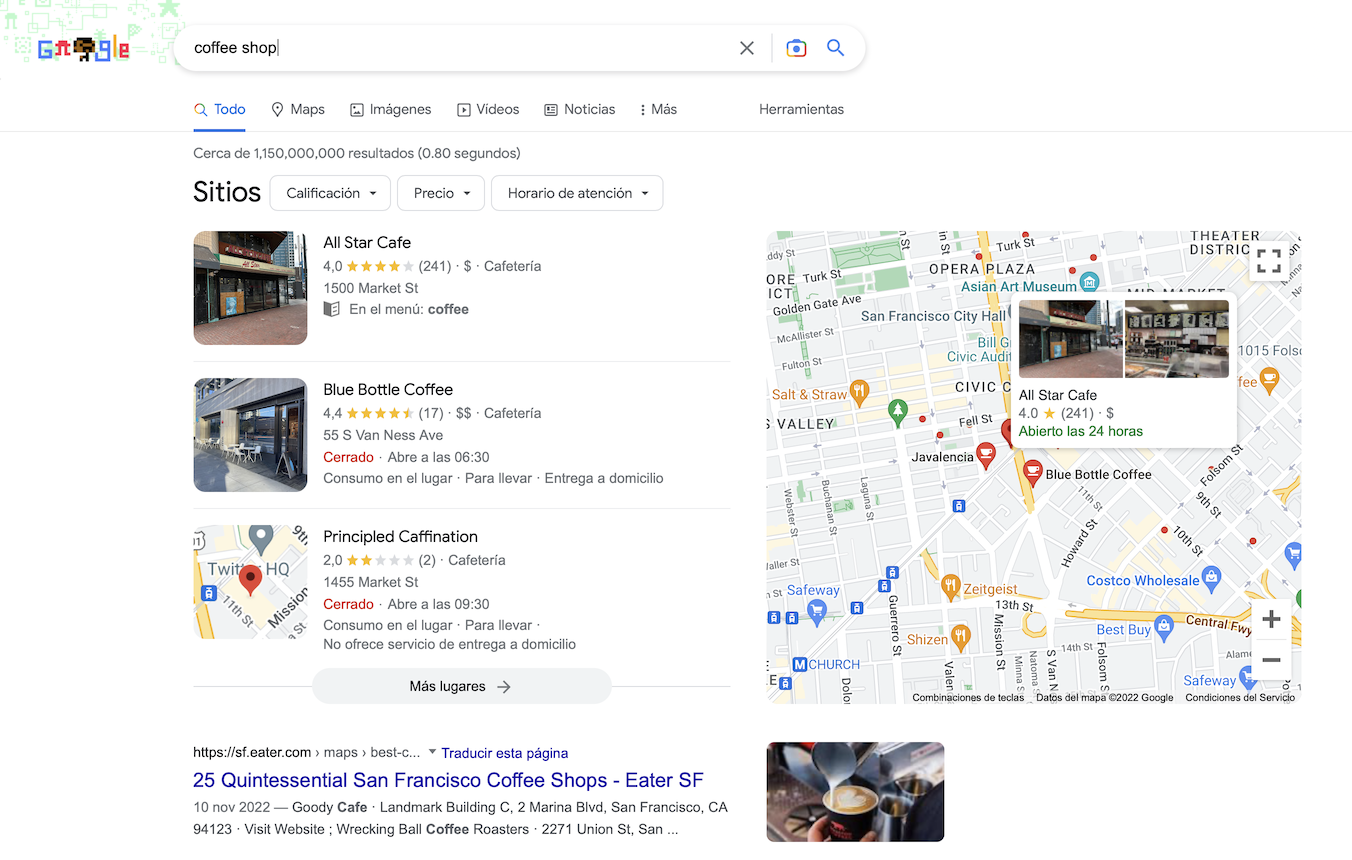

For example, the URL for coffee shops in San Francisco in Spanish would be: https://www.google.com/search?q=coffee+shop&uule=w+CAIQICImU2FuIEZyYW5jaXNjbyxDYWxpZm9ybmlhLFVuaXRlZCBTdGF0ZXM&gl=us&hl=es.

This parameter only affects the text displayed by Google on its result page. If you search for an English keyword and the result link is in English, Google will not translate the result description (the text snippet you see under the link) even if you set a different language in the hl parameter.

Here is the full list of all supported language codes: https://developers.google.com/custom-search/docs/xml_results_appendices#interfaceLanguages.
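Putting the query parameters together, a small helper could look like the sketch below. The name build_serp_url and its signature are ours. Note that the uule value is inserted as-is: it already contains a + sign, and encoding it a second time would change its meaning.

```python
from typing import Optional
from urllib.parse import quote_plus


def build_serp_url(
    keyword: str, uule: str, country: str, language: Optional[str] = None
) -> str:
    """Build a Google search URL with geolocation and optional host language."""
    url = (
        "https://www.google.com/search"
        f"?q={quote_plus(keyword)}"  # keyword must be URL encoded
        f"&uule={uule}"              # already URL-ready, do not re-encode
        f"&gl={country}"
    )
    if language:
        url += f"&hl={language}"
    return url


print(
    build_serp_url(
        "coffee shop",
        "w+CAIQICImU2FuIEZyYW5jaXNjbyxDYWxpZm9ybmlhLFVuaXRlZCBTdGF0ZXM",
        "us",
        "es",
    )
)
```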

Python code for automated scraping

Localization of Google SERP is very useful in web scraping and SEO analytics.

Below we provide Python code which scrapes Google SERP for a given keyword with geolocation.

To run it, you have to install dependencies: pip install requests uule_grabber

from urllib.parse import quote_plus

import requests
import uule_grabber


def main(api_key, keyword, location, country, language, output):
    # URL-encode the search query
    keyword = quote_plus(keyword)
    # generate the `uule` value for the location string
    uule = uule_grabber.uule(location)
    # full URL for the desired Google SERP
    search_url = f"https://www.google.com/search?q={keyword}&uule={uule}&gl={country}&hl={language}"
    # Scraping Fish API URL
    url_prefix = f"https://api.scrapingfish.com/api/v1/?api_key={api_key}&url="
    # send GET request; the target URL is itself URL-encoded
    response = requests.get(f"{url_prefix}{quote_plus(search_url)}", timeout=90)
    with open(output, "wb") as f:
        # save response to file
        f.write(response.content)
    print(f"Result saved to {output}")


main(
    api_key="YOUR SCRAPING FISH API KEY",  # https://scrapingfish.com/buy
    keyword="coffee shop",
    location="California,United States",
    country="us",
    language="en",
    output="./google.html",
)

A script with a command-line interface is shared in our GitHub repository.

To run it, you will need a Scraping Fish API key, which you can get here: https://scrapingfish.com/buy

The script uses the Scraping Fish API to ensure that you always get relevant SERP content, since making too many requests to Google might result in a captcha.

Scraping Fish is an API for web scraping powered by ethically-sourced mobile proxies.
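If you would rather wire up your own command-line wrapper around the main function, argparse from the standard library is enough. The sketch below is not the script from our repository; the flag names and defaults are hypothetical and simply mirror the arguments of main.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse command-line options (hypothetical flag names)."""
    parser = argparse.ArgumentParser(
        description="Scrape Google SERP for a keyword with geolocation."
    )
    parser.add_argument("--api-key", required=True, help="Scraping Fish API key")
    parser.add_argument("--keyword", required=True, help="search keyword")
    parser.add_argument("--location", required=True, help="canonical location name")
    parser.add_argument("--country", default="us", help="value for the gl parameter")
    parser.add_argument("--language", default="en", help="value for the hl parameter")
    parser.add_argument("--output", default="./google.html", help="output file path")
    return parser.parse_args(argv)


# Passing an explicit list keeps the example independent of sys.argv.
args = parse_args(
    ["--api-key", "KEY", "--keyword", "coffee shop",
     "--location", "California,United States"]
)
print(args.keyword, args.location, args.country, args.language)
```

The parsed values can then be passed straight through, for example main(args.api_key, args.keyword, args.location, args.country, args.language, args.output).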

Conclusion

Google SERP is an important source of information for market analysis, local businesses, and SEO in general. In this blog post, we showed how you can see Google SERP from an arbitrary location without using a VPN or proxies located in specific countries.

We have also shared a Python code snippet and a script which will let you automate Google SERP web scraping at scale using the Scraping Fish API.

Let's talk about your use case 💼

Feel free to reach out using our contact form. We can assist you in integrating Scraping Fish API into your existing web scraping workflow or help you set up a scraping system for your use case.